ML Framework in O-RAN

Introduction

One of the key aspects of Open RAN is natively embedding intelligence into the RAN, and Artificial Intelligence (AI)/Machine Learning (ML) plays a crucial role in achieving this. The goals of AI/ML in radio access networks include reducing the manual effort of going through large amounts of data to diagnose issues and make decisions, and predicting future network behavior to take proactive actions, thus saving time and cost. AI/ML-based algorithms may be used, e.g., for anomaly detection in network security applications, prediction of radio resource utilization, hardware failure prediction, parameter forecasting for energy saving, or conflict detection between xApps. O-RAN ALLIANCE has been addressing this from the very beginning.

In this post, we discuss the overall framework for machine learning within O-RAN, touching upon the architectural aspects related to Open RAN.

Note: If you are interested in the Open RAN concept, check this post. If you are interested in the details of O-RAN architecture, nodes, and interfaces, here is the relevant post.

ML Framework within O-RAN Architecture

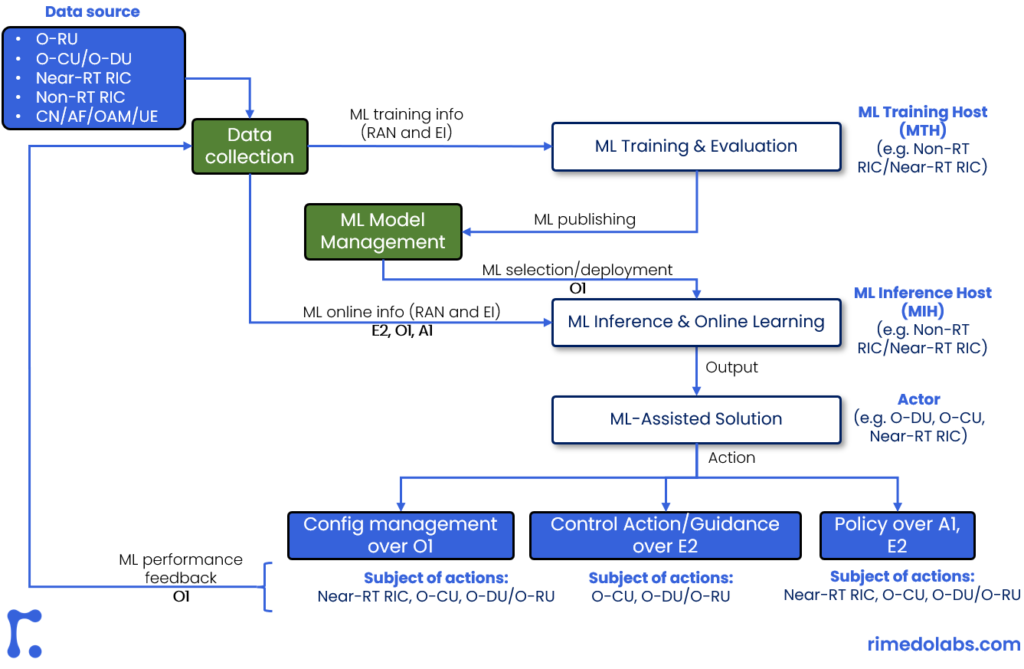

Fig. 1 shows a simplified ML framework and the general procedure for its operation within O-RAN.

Data is collected through O-RAN interfaces (such as O1, E2, or A1) from practically all O-RAN entities, including the O-RU, O-DU, O-CU, and the Near- and Non-RT RICs; it can also come from a UE, the Core Network (CN), or Application Functions (AF). The collected data can be, e.g., regular Performance Measurements (PM), statistics, or Enrichment Information (EI).

This data is used by ML training and inference functions:

- ML Training Host (MTH) is a network function hosting online and offline training of the model (typically the Non-RT RIC is used for this purpose, but the Near-RT RIC can also be used in some scenarios).

- ML Inference Host (MIH) is a network function hosting the ML model in inference mode, including model execution and online learning (either the Non-RT or the Near-RT RIC can be used here).

The inference host provides its output to an Actor, i.e., an entity hosting an ML-assisted solution (here, the O-DU, O-CU, or Non-/Near-RT RIC). The Actor uses the ML inference results to optimize RAN performance. Based on its decision, an action is taken on a Subject, i.e., an entity or function that is configured, controlled, or informed by the action.

After the action is taken, the Subjects provide feedback that serves as input data for the next iteration. An important aspect of the whole framework is that any ML model must be trained and tested before being deployed in the network, i.e., a completely untrained model is never deployed.
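To make the loop more concrete, here is a minimal Python sketch of one iteration, assuming a toy energy-saving use case: the MTH learns a low-load threshold from PRB-utilization samples, the MIH flags low-load cells, the Actor switches the Subject (a cell) off, and measurements from the cells that stay active are fed back as data for the next iteration. All class and method names, the threshold rule, and the numbers are hypothetical illustrations, not O-RAN-defined APIs; the check in the `InferenceHost` constructor mirrors the rule that an untrained model is never deployed.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical toy classes illustrating the O-RAN ML loop; not O-RAN-defined APIs.


@dataclass
class Model:
    trained: bool = False
    low_load_threshold: float = 0.0  # PRB utilization below this counts as "low load"


class TrainingHost:
    """MTH: trains the model (toy rule: threshold = half of the mean utilization)."""

    def train(self, prb_samples: list[float]) -> Model:
        return Model(trained=True, low_load_threshold=0.5 * mean(prb_samples))


class InferenceHost:
    """MIH: executes the model and refuses untrained models."""

    def __init__(self, model: Model) -> None:
        if not model.trained:
            raise ValueError("an untrained model must not be deployed")
        self.model = model

    def is_low_load(self, prb_utilization: float) -> bool:
        return prb_utilization < self.model.low_load_threshold


@dataclass
class Cell:
    """Subject: the entity the action is applied to."""
    name: str
    prb_utilization: float
    active: bool = True


class Actor:
    """Actor: turns the inference output into an action on the Subject."""

    def act(self, mih: InferenceHost, cell: Cell) -> None:
        if mih.is_low_load(cell.prb_utilization):
            cell.active = False  # e.g., switch the cell off to save energy


def run_iteration(history: list[float], cells: list[Cell]) -> list[float]:
    """One loop iteration: train -> infer -> act -> collect feedback."""
    mih = InferenceHost(TrainingHost().train(history))
    actor = Actor()
    for cell in cells:
        actor.act(mih, cell)
    # Feedback from the Subjects that remain active becomes input data for the next iteration.
    return history + [c.prb_utilization for c in cells if c.active]


if __name__ == "__main__":
    cells = [Cell("cell-A", 0.05), Cell("cell-B", 0.60)]
    history = run_iteration([0.4, 0.6, 0.5], cells)
    print([(c.name, c.active) for c in cells])
```

With the sample data, the learned threshold is 0.25, so the lightly loaded cell-A is switched off while cell-B stays on and contributes its measurement to the next training round.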

Based on the output of the ML model, an ML-assisted solution (i.e., a solution that addresses a specific use case using ML algorithms during operation) informs the Actor to take the necessary actions toward the Subject. Depending on where the ML inference host and the Actor are located, these can include CM (Configuration Management) changes over O1, policy management over A1, or control actions or policies over E2.
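The interface used for an action follows from where the Actor sits and which entity it acts on. The lookup below is a simplified sketch of that mapping, assuming only the three cases named above: O1 CM changes from the Non-RT RIC (via the SMO) toward the O-CU/O-DU, A1 policies from the Non-RT RIC toward the Near-RT RIC, and E2 control actions or policies from the Near-RT RIC toward the O-CU/O-DU. The function name and string labels are hypothetical.

```python
# Hypothetical lookup: (Actor location, Subject) -> (interface, kind of action).
# Simplified to the three cases discussed in the text; real deployments have more.
E2_NODES = {"O-CU", "O-DU"}


def action_path(actor: str, subject: str) -> tuple[str, str]:
    if actor == "Non-RT RIC" and subject == "Near-RT RIC":
        return ("A1", "policy management")
    if actor == "Non-RT RIC" and subject in E2_NODES:
        # The Non-RT RIC is part of the SMO, which manages nodes over O1.
        return ("O1", "configuration management (CM) change")
    if actor == "Near-RT RIC" and subject in E2_NODES:
        return ("E2", "control action or policy")
    raise ValueError(f"no path modeled for {actor} -> {subject}")


print(action_path("Near-RT RIC", "O-DU"))  # ('E2', 'control action or policy')
```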

ML training and inference can be hosted in different entities of the O-RAN architecture, the key ones being the Non-RT RIC and the Near-RT RIC, located in the management plane and the RAN domain, respectively. [O-RAN-ML] defines these placements as deployment scenarios, e.g.:

- Option 1 (Fig. 2a): MTH and MIH at the Non-RT RIC;

- Option 2 (Fig. 2b): MTH at the Non-RT RIC, MIH at the Near-RT RIC;

- Option 3 (Fig. 2c): MTH outside the Non-RT RIC but within the SMO, MIH at the Non-RT RIC.

Types of ML Algorithms and Actor Locations within O-RAN Architecture

ML algorithms are commonly divided into three main groups:

- Supervised Learning (SL) – uses labeled datasets either for prediction of a given quantity based on the input (e.g., traffic load based on the time of day) or for classification, i.e., assigning the proper label to the input data (e.g., classifying traffic load as low, medium, or high);

- Unsupervised Learning (UL) – deals with unlabeled datasets to discover hidden patterns; the most common are clustering algorithms, which can be used, e.g., to analyze network coverage based on RSRP reports;

- Reinforcement Learning (RL) – an agent learns how to act through interaction with the environment, e.g., different traffic steering policies are tested under various traffic load conditions so that the agent learns which policy to choose.
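The RL idea can be illustrated with its simplest form, a multi-armed bandit solved with the epsilon-greedy rule: the agent mostly picks the policy with the best estimated reward and occasionally explores another one. This is a sketch under stated assumptions: the three policy names, their mean rewards, and the Gaussian reward noise are hypothetical, and for brevity the agent ignores the traffic load condition (a contextual bandit or a full RL agent would condition its choice on it).

```python
import random

# Hypothetical traffic steering policies and their true mean reward (e.g., normalized
# throughput) under the current load; the agent does not know these values.
TRUE_MEAN_REWARD = {"load-balance": 0.55, "coverage-first": 0.40, "qos-first": 0.70}


def epsilon_greedy(steps: int = 2000, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Learn action-value estimates for each policy by trial and error."""
    rng = random.Random(seed)
    policies = list(TRUE_MEAN_REWARD)
    counts = {p: 0 for p in policies}
    estimates = {p: 0.0 for p in policies}
    for _ in range(steps):
        if rng.random() < epsilon:
            policy = rng.choice(policies)  # explore a random policy
        else:
            policy = max(policies, key=estimates.get)  # exploit the best estimate
        reward = rng.gauss(TRUE_MEAN_REWARD[policy], 0.1)  # noisy environment feedback
        counts[policy] += 1
        # Incremental mean update of the action-value estimate.
        estimates[policy] += (reward - estimates[policy]) / counts[policy]
    return estimates


if __name__ == "__main__":
    est = epsilon_greedy()
    print(max(est, key=est.get), est)
```

The exploration rate `epsilon` trades off trying other policies against exploiting the current best one; with enough steps, the estimates converge toward the true mean rewards.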

The placement of the ML model components (i.e., ML training and ML inference) for a given use case mostly depends on the trade-off between the communication delay incurred in Option 1 and the computational capabilities of the Near-RT RIC in Option 2, as well as on the targeted control loop (Non-RT, Near-RT, or RT). The availability and volume of data accessible over the different O-RAN interfaces should also be taken into account.

The table below summarizes how different ML algorithm types can be deployed within the O-RAN architecture (based on [O-RAN-ML]).
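As a rough illustration of this trade-off, the sketch below picks a deployment option from a use case's latency budget, using the commonly cited O-RAN control-loop timescales (Non-RT: 1 s and above, Near-RT: 10 ms to 1 s, RT: below 10 ms). The heuristic itself, the `fits_near_rt_ric` flag, and the function names are hypothetical simplifications; they ignore data availability, and a Non-RT use case could equally use Option 3 if training runs elsewhere in the SMO.

```python
from typing import Optional

# Deployment scenarios as summarized in the text: option -> (MTH location, MIH location).
DEPLOYMENT_OPTIONS = {
    "Option 1": ("Non-RT RIC", "Non-RT RIC"),
    "Option 2": ("Non-RT RIC", "Near-RT RIC"),
    "Option 3": ("SMO (outside the Non-RT RIC)", "Non-RT RIC"),
}


def control_loop(latency_budget_s: float) -> str:
    """Classify a use case by its required loop latency (O-RAN timescales)."""
    if latency_budget_s >= 1.0:
        return "Non-RT"   # 1 s and above
    if latency_budget_s >= 0.01:
        return "Near-RT"  # 10 ms to 1 s
    return "RT"           # below 10 ms


def suggest_option(latency_budget_s: float, fits_near_rt_ric: bool) -> Optional[str]:
    """Hypothetical placement heuristic; returns None if no listed option fits."""
    loop = control_loop(latency_budget_s)
    if loop == "Non-RT":
        # The communication delay of inference at the Non-RT RIC is acceptable here.
        return "Option 1"
    if loop == "Near-RT" and fits_near_rt_ric:
        # Inference must run close to the RAN, and the Near-RT RIC can execute the model.
        return "Option 2"
    # A Near-RT loop with a model too heavy for the Near-RT RIC, or an RT loop,
    # is not covered by the options listed above.
    return None


for budget, fits in [(60.0, False), (0.1, True), (0.1, False), (0.005, True)]:
    option = suggest_option(budget, fits)
    placement = DEPLOYMENT_OPTIONS.get(option) if option else None
    print(budget, fits, option, placement)
```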

| ML algorithm type | Key concept | Algorithm examples | Deployment options (MTH / MIH) |
|---|---|---|---|
| Supervised learning | ML task aims to learn a mapping function from input to output given a labeled dataset | Linear regression, logistic regression, KNN, CART, SVM, Naive Bayes, Extreme Gradient Boosting | Option 1: Non-RT RIC (MTH, MIH); Option 2: Non-RT RIC (MTH), Near-RT RIC (MIH) |
| Unsupervised learning | ML task aims to learn a function describing a hidden structure in unlabeled data | K-means clustering, PCA | Option 1: Non-RT RIC (MTH, MIH); Option 2: Non-RT RIC (MTH), Near-RT RIC (MIH) |
| Reinforcement learning | Agent aims to optimize a long-term objective by interacting with the environment through a trial-and-error process | Q-learning, multi-armed bandit learning, Deep Q-Network, SARSA, temporal difference learning, deep deterministic policy gradient, trust region policy optimization, Dyna-Q, Monte Carlo tree search | Option 1: Non-RT RIC (MTH, MIH); Option 2: Non-RT RIC (MTH), Near-RT RIC (MIH) |

ML Models in O-RAN Use Cases

There are various application types within the scope of RAN optimization and value prediction. Some ML algorithm types are better suited to certain problems in this area, while others fit different ones. O-RAN ALLIANCE analyzes this mapping from the perspective of specific use cases, such as QoE optimization, Traffic Steering, QoE-based Traffic Steering, or V2X Handover Management (detailed use case definitions can be found in [O-RAN-UC]).

Taking into account the types of ML algorithms and their suggested placement within the O-RAN architecture, the table below lists example use cases analyzed within O-RAN ALLIANCE, together with the relevant ML algorithm types, deployment options, input and output data, and a description of the model functionality.

| Use case | AI/ML model functionality | AI/ML algorithm types (examples) | Training / inference host location | Data input | Data output |
|---|---|---|---|---|---|
| QoE optimization | Service type classification | Supervised learning (e.g., CNN, DNN) | MTH: Non-RT RIC; MIH: Near-RT RIC | User traffic data | Service type |
| QoE optimization | KQI/QoE prediction (e.g., good/bad, video stall ratio, stall duration) | Supervised learning (e.g., LSTM, XGBoost) | MTH: Non-RT RIC; MIH: Near-RT RIC | Network data (L2 measurement reports related to the traffic pattern: throughput, latency, packets/s), UE-level radio channel info, mobility-related metrics, RAN protocol stack status (PDCP buffer status), cell-level info (DL/UL PRB occupation ratio), app data (video QoE score, stalling delay, stalling timestamps, etc.) | KQI/QoE value (e.g., good/bad, stalling ratio, video stalling duration, vMOS) |
| QoE optimization | Available radio bandwidth prediction | Supervised learning (e.g., DNN) | MTH: Non-RT RIC; MIH: Near-RT RIC | Network data, UE-level radio channel info, mobility-related metrics, RAN protocol stack status, cell-level info, app data | Available radio bandwidth |
| Traffic steering | Cell load / user traffic volume prediction | Supervised learning (time series prediction, e.g., SVR, DNN) | Option 1: MTH and MIH at Non-RT RIC; Option 2: MTH at Non-RT RIC, MIH at Near-RT RIC | Load-related counters, e.g., UL/DL PRB occupation | Predicted load-related counters, e.g., UL/DL PRB occupation |
| Traffic steering | Radio fingerprint prediction | Supervised learning (e.g., SVR, GBDT) | MTH: Non-RT RIC; MIH: Near-RT RIC | Intra-frequency MR (measurement report) data and PM counters (e.g., RSRP, RSRQ, MCS, CQI) | Inter-frequency MR data (e.g., RSRP, RSRQ, MCS, CQI) |
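The cell load prediction row can be illustrated with a minimal time-series predictor. The sketch below swaps in the simplest possible supervised model, a least-squares autoregressive AR(1) fit y[t+1] ≈ a·y[t] + b, instead of the SVR or DNN named in the table, purely to keep the example short. The PRB occupation samples are hypothetical, and the forecast is clipped to [0, 1] because the occupation is a ratio.

```python
# Swapped-in technique: a least-squares AR(1) model y[t+1] ~ a*y[t] + b,
# used here instead of SVR/DNN purely for brevity.


def fit_ar1(series: list[float]) -> tuple[float, float]:
    """Fit y[t+1] = a*y[t] + b by ordinary least squares."""
    if len(series) < 3:
        raise ValueError("need at least 3 samples")
    x, y = series[:-1], series[1:]
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("constant series: slope is undefined")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def forecast(series: list[float], steps: int) -> list[float]:
    """Iterated multi-step forecast, clipped to the valid PRB ratio range [0, 1]."""
    a, b = fit_ar1(series)
    last, out = series[-1], []
    for _ in range(steps):
        last = min(1.0, max(0.0, a * last + b))
        out.append(last)
    return out


# Hypothetical DL PRB occupation ratios of one cell, one sample per reporting period.
dl_prb = [0.42, 0.48, 0.55, 0.61, 0.58, 0.52, 0.47, 0.44]
print(forecast(dl_prb, steps=3))
```

In a Option 2 deployment, `fit_ar1` would correspond to training at the Non-RT RIC and `forecast` to inference at the Near-RT RIC; a real predictor would use richer features and a more expressive model.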

Note: If you are interested in the elaboration of the O-RAN Use Cases and details on Traffic Steering, check out this blog post.

ML Model Lifecycle Implementation Example

Let’s now look at an example of ML model lifecycle implementation within the O-RAN architecture [O-RAN-ML]. Figure 3 below provides a high-level overview of the typical steps of AI/ML-based use case applications within O-RAN architecture considering Supervised Learning/Unsupervised Learning ML models.

First, the ML modeler uses a designer environment to create the initial ML model, which is then sent to the training hosts. In this example, appropriate datasets are collected from the Near-RT RIC, O-CU, and O-DU into a data lake (i.e., a centralized repository that stores and processes large amounts of structured and unstructured data) and passed to the ML training hosts. Importantly, the first phase of training is conducted offline (based on cached data and, e.g., an accurate network simulator), even for the RL approach.

After successful offline training and extensive testing, the trained model is uploaded to the ML designer catalog, and the final ML model is thus composed.

Next, the ML model is published to Non-RT RIC along with the associated license and metadata. In this example, Non-RT RIC creates a containerized ML application containing the necessary model artifacts.

Following this, Non-RT RIC deploys the ML application to the Near-RT RIC, O-DU, and O-CU using the O1 interface. Policies for the ML models are also set using the A1 interface.

Finally, PM (Performance Measurement) data is sent back to ML training hosts from Near-RT RIC, O-DU, and O-CU for retraining.
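The steps above form a cycle that can be sketched as a small state machine. This is a hypothetical encoding: the stage names and the `ModelLifecycle` class are illustrative, not O-RAN-defined. The allowed transitions follow the order described in this example, and because there is no shortcut to deployment, a model cannot reach the network untrained or untested; after PM-based retraining, it goes through testing and publishing again.

```python
from enum import Enum, auto

# Hypothetical encoding of the lifecycle steps described above; stage names are
# illustrative, not O-RAN-defined.


class Stage(Enum):
    DESIGNED = auto()    # initial model created in the designer environment
    TRAINED = auto()     # offline training on data-lake data (or a simulator)
    TESTED = auto()      # extensive tests passed
    PUBLISHED = auto()   # in the designer catalog; published to Non-RT RIC with license/metadata
    DEPLOYED = auto()    # containerized ML app deployed over O1, policies set over A1
    RETRAINING = auto()  # PM data sent back to the training hosts


# Allowed transitions; there is deliberately no shortcut from DESIGNED or TRAINED
# to DEPLOYED, so a model cannot reach the network untrained or untested.
ALLOWED = {
    Stage.DESIGNED: {Stage.TRAINED},
    Stage.TRAINED: {Stage.TESTED},
    Stage.TESTED: {Stage.PUBLISHED},
    Stage.PUBLISHED: {Stage.DEPLOYED},
    Stage.DEPLOYED: {Stage.RETRAINING},
    Stage.RETRAINING: {Stage.TRAINED},  # retrained models go through testing again
}


class ModelLifecycle:
    def __init__(self, name: str) -> None:
        self.name = name
        self.stage = Stage.DESIGNED
        self.history = [Stage.DESIGNED]

    def advance(self, target: Stage) -> None:
        """Move to the next stage, rejecting transitions the lifecycle does not allow."""
        if target not in ALLOWED[self.stage]:
            raise ValueError(f"{self.name}: {self.stage.name} -> {target.name} is not allowed")
        self.stage = target
        self.history.append(target)


if __name__ == "__main__":
    m = ModelLifecycle("ts-load-predictor")  # hypothetical model name
    for s in (Stage.TRAINED, Stage.TESTED, Stage.PUBLISHED, Stage.DEPLOYED,
              Stage.RETRAINING, Stage.TRAINED):
        m.advance(s)
    print(" -> ".join(s.name for s in m.history))
```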

Summary

Artificial Intelligence plays an important role in Open RAN networks. ML-based algorithms and ML-assisted solutions make it possible to reduce manual effort, predict future behavior by observing trends (e.g., predicting low resource utilization to switch off cells for energy saving), detect anomalies (e.g., network attacks), and improve system efficiency (e.g., through more efficient utilization of radio resources). O-RAN ALLIANCE has natively embedded AI/ML into its standardization work from the start. This includes creating a dedicated AI/ML framework and defining its entities and procedures; embedding AI/ML-related functional blocks within the RAN Intelligent Controllers; defining A1 interface elements dedicated to the provisioning of ML models and enrichment information; and defining specific use cases that would utilize AI/ML-based solutions. The standard documents cover multiple options for deploying ML model training and inference, with the Non-RT RIC and Near-RT RIC playing a significant role.

If you are interested in AI for O-RAN security, check out this blog post: AI for O-RAN Security – RIMEDO Labs.

If you are interested in ML-assisted solutions developed by our team:

- for energy efficiency, see: O-RAN Network Energy Saving: Cell Switching On/Off, or: O-RAN as an Enabler for Energy Efficiency in 5G Networks;

- for beamforming, see: Beamforming and Open RAN

References

[O-RAN-ML] O-RAN WG2, "AI/ML workflow description and requirements", O-RAN.WG2.AIML-v01.03

[O-RAN-UC] O-RAN WG1, "O-RAN Use Cases Detailed Specification", O-RAN.WG1.Use-Cases-Detailed-Specification-v09.00

Rimedo Resources

- Other O-RAN posts: Introduction to O-RAN: Concept and Entities, O-RAN Architecture, Nodes, and Interfaces, O-RAN Near-Real-Time RIC, O-RAN Use Cases: Traffic Steering

- O-RAN whitepapers: The O-RAN Whitepaper 2022 (RAN Intelligent Controller), The O-RAN Whitepaper 2021

- O-RAN products and services: O-RAN – RIMEDO Labs

- O-RAN slicing webinar: Network Slicing in O-RAN (intelefy.com)

- O-RAN course: O-RAN System Training (intelefy.com)

Acknowledgment

Many thanks to Marcin Hoffmann and Pawel Kryszkiewicz for their valuable comments and suggestions for improvements to this post.

Author Bio

Marcin Dryjanski received his Ph.D. (with distinction) from the Poznan University of Technology in September 2019. Over the past 12 years, Marcin has served as an R&D engineer and consultant, technical trainer, technical leader, advisor, and board member. Marcin has been involved in 5G design since 2012, when he was a work-package leader in the FP7 5GNOW project. Since 2018, he has been an IEEE Senior Member. He is a co-author of many articles on 5G and LTE-Advanced Pro and a co-author of the book "From LTE to LTE-Advanced Pro and 5G" (M. Rahnema, M. Dryjanski, Artech House 2017). From October 2014 to October 2017, he was an external advisor at Huawei Technologies Sweden AB, working on RAN algorithms and architecture for LTE-Advanced Pro and 5G systems. Marcin is a co-founder of Grandmetric, where he served as a board member and wireless architect between 2015 and 2020. Currently, he serves as CEO and principal consultant at Rimedo Labs.